ProtoChat: Supporting the Conversation Design Process with Crowd Feedback

Abstract

Similar to a design process for designing graphical user interfaces, conversation designers often apply an iterative design process by defining a conversation flow, testing with users, reviewing user data, and improving the design. While it is possible to iterate on conversation design with existing chatbot prototyping tools, there still remain challenges in recruiting participants on-demand and collecting structured feedback on specific conversational components. These limitations hinder designers from running rapid iterations and making informed design decisions. We posit that involving a crowd in the conversation design process can address these challenges, and introduce ProtoChat, a crowd-powered chatbot design tool built to support the iterative process of conversation design. ProtoChat makes it easy to recruit crowd workers to test the current conversation within the design tool. ProtoChat’s crowd-testing tool allows crowd workers to provide concrete and practical feedback and suggest improvements on specific parts of the conversation. With the data collected from crowd-testing, ProtoChat provides multiple types of visualizations to help designers analyze and revise their design. Through a three-day study with eight designers, we found that ProtoChat enabled an iterative design process for designing a chatbot. Designers improved their design by not only modifying the conversation design itself, but also adjusting the persona and getting UI design implications beyond the conversation design itself. The crowd responses were helpful for designers to explore user needs, contexts, and diverse response formats. With ProtoChat, designers can successfully collect concrete evidence from the crowd and make decisions to iteratively improve their conversation design.

System

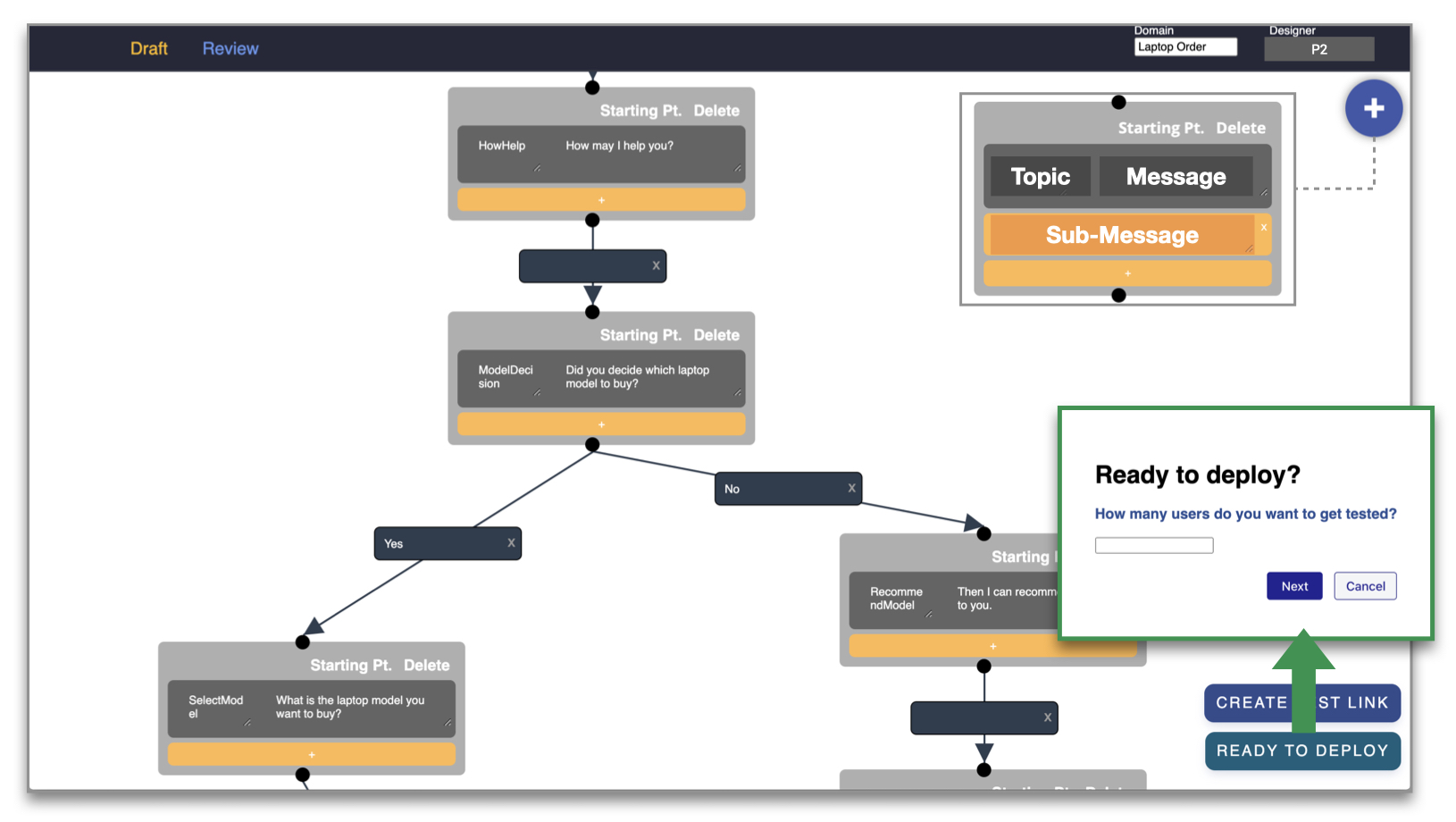

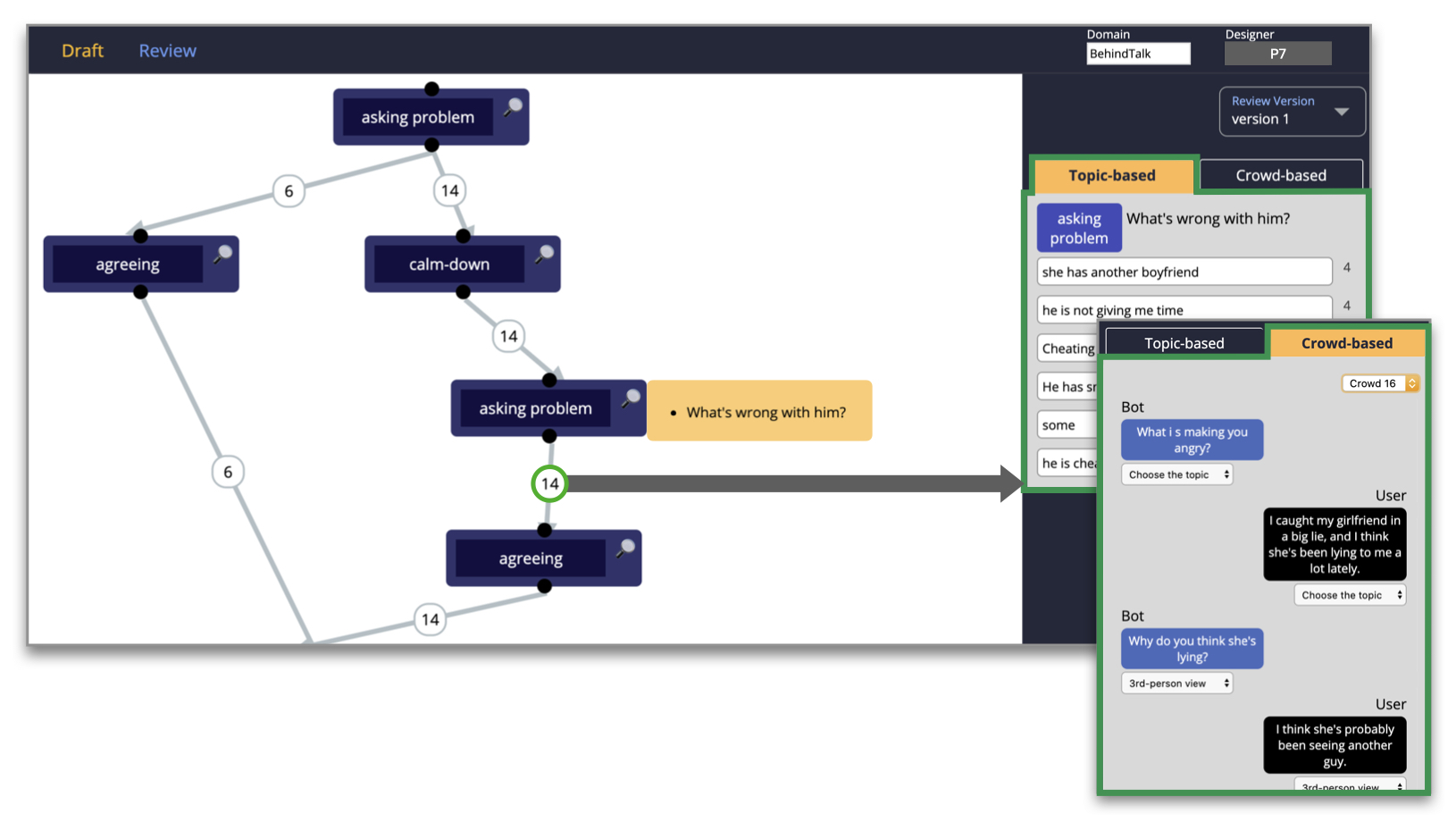

ProtoChat helps designers rapidly iterate on a conversation design by letting them create conversation sequences, quickly test the designed conversation with the crowd, analyze the crowd-tested conversation data, and revise the conversation design. These features are manifested in two main interfaces: the designer interface and the crowd-testing interface.

-

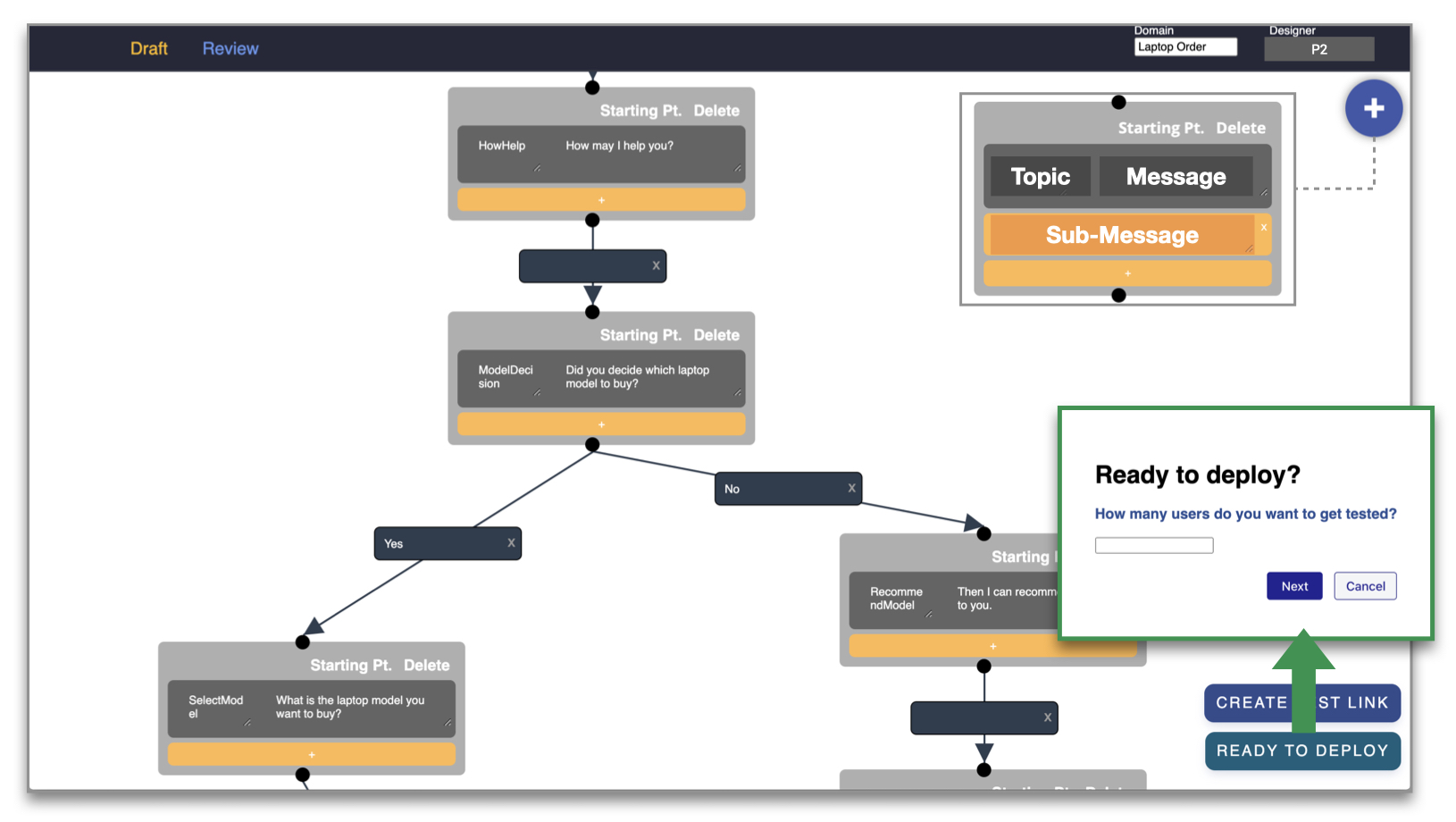

Design Interface

Designers can draft, test, and deploy their conversation.

-

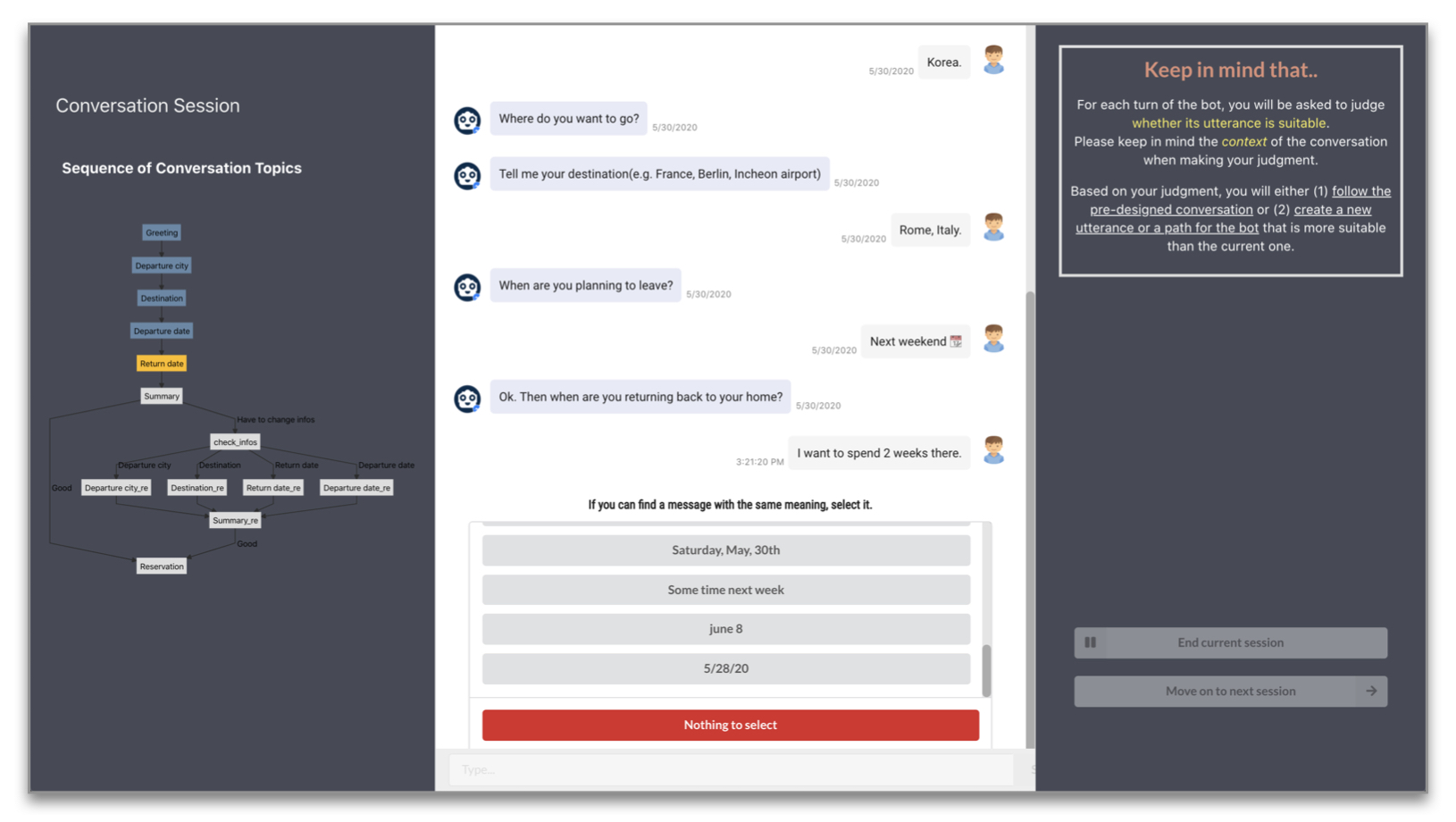

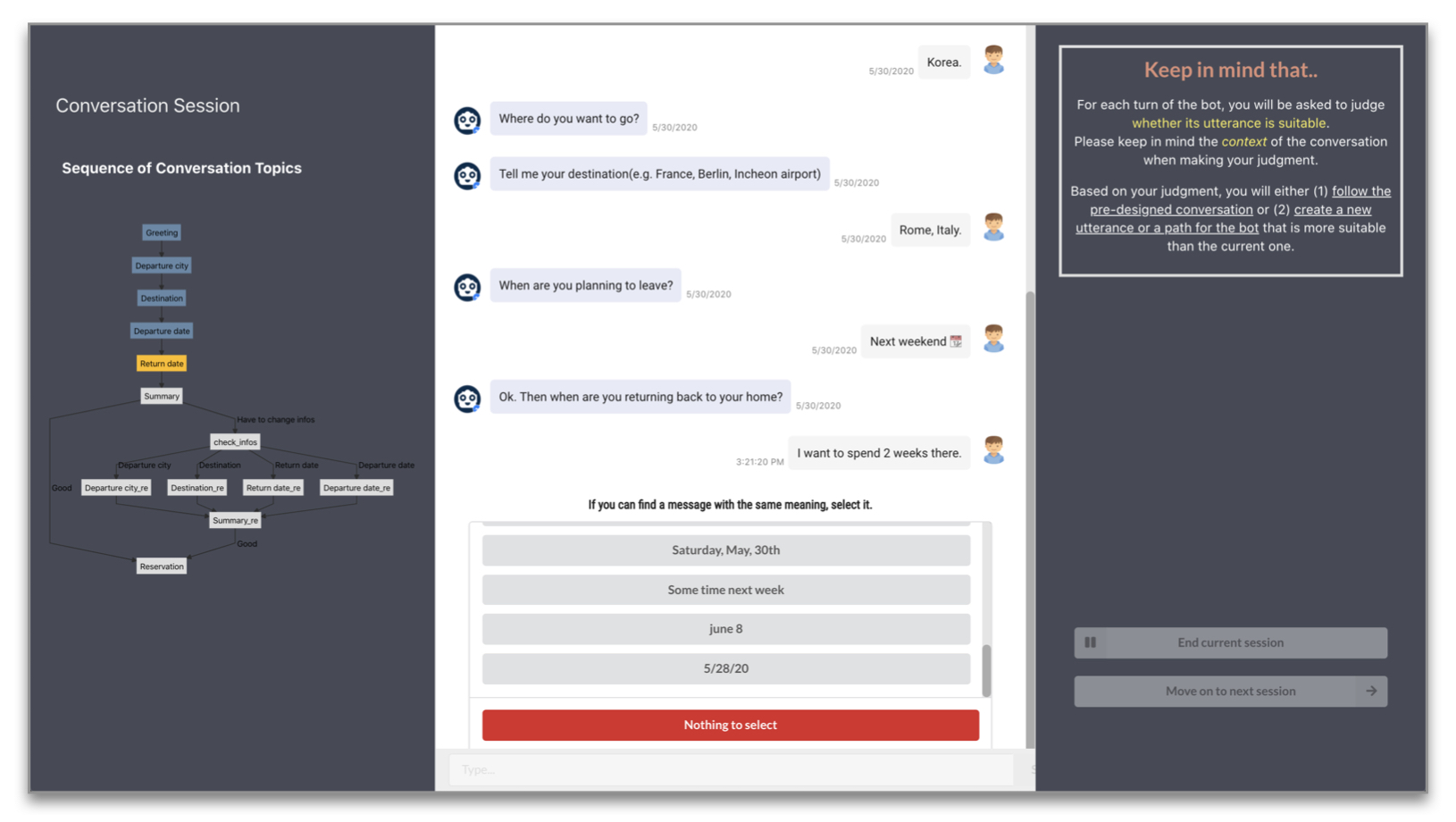

Crowd-testing interface

Crowd worker can perform three kinds of interactions within testing phase.

-

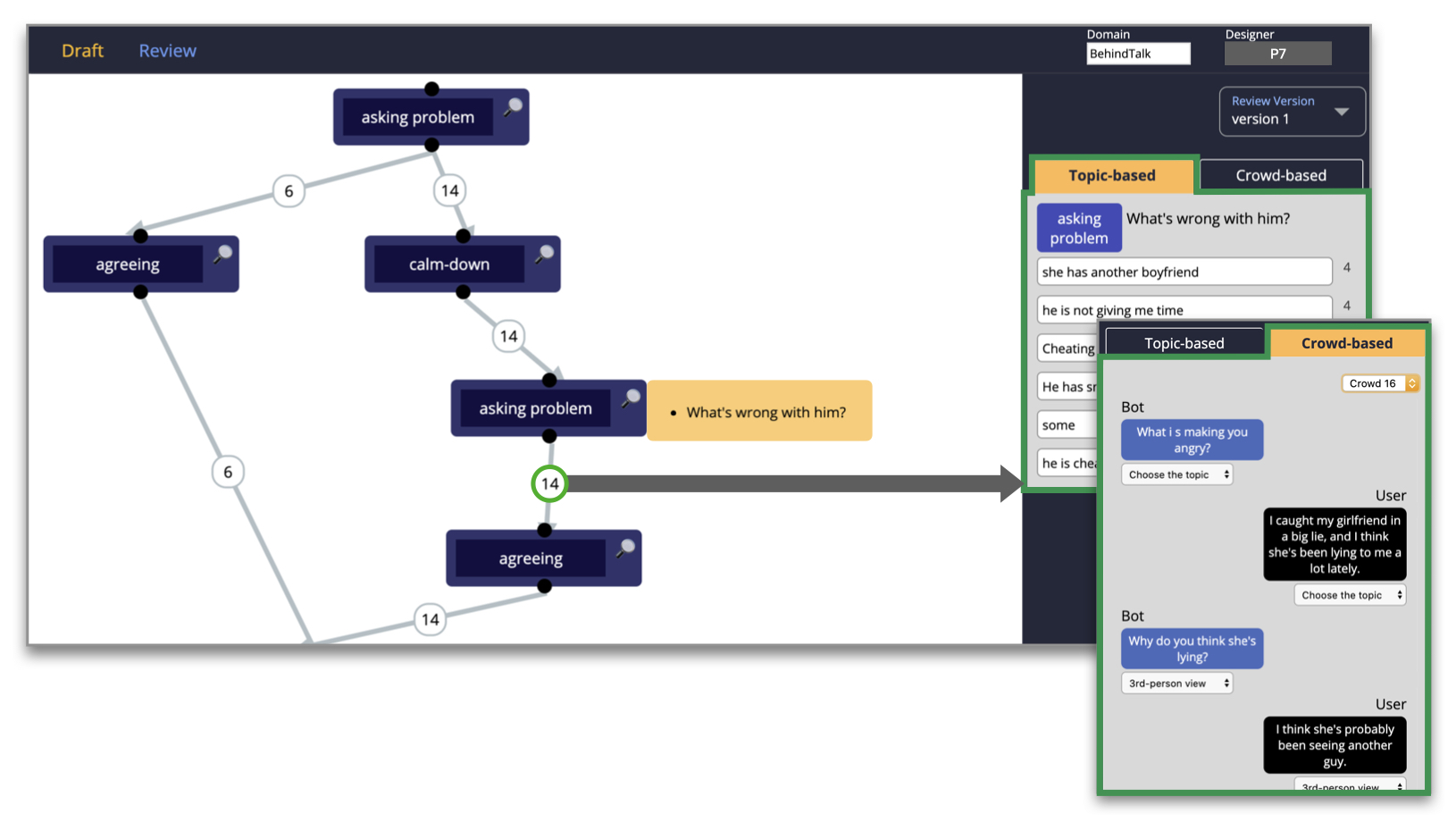

Review interface

Designers can browse and analyze the data from the crowd-testing.

Crowd Interactions

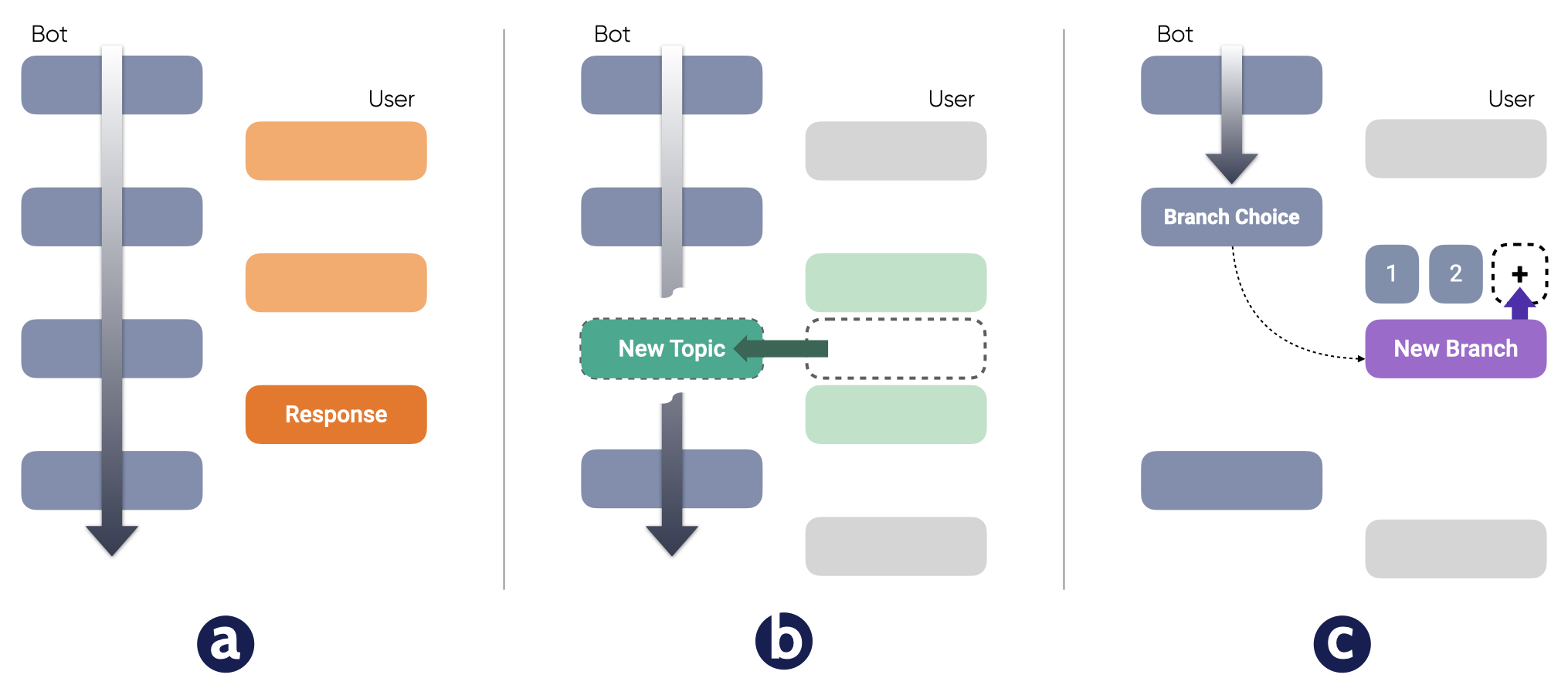

When using the crowd-testing interface, crowd workers can perform three kinds of interaction in the designed conversation: (a) follow the conversation flow and add a user-side response, (b) add a bot-side utterance, or (c) add a branch on the user’s side.
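To make the three interactions concrete, here is a minimal sketch that models a designed conversation as a tree of turns. It is an illustration only and not ProtoChat's actual implementation: the `Node` class, its fields, and the three helper functions are hypothetical names chosen for this example, and the sample utterances are invented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One turn in a conversation tree (hypothetical structure)."""
    speaker: str                      # "bot" or "user"
    text: str
    source: str = "designer"          # who authored the turn: "designer" or "crowd"
    children: List["Node"] = field(default_factory=list)
    responses: List[str] = field(default_factory=list)  # crowd replies at a user turn


def add_user_response(user_turn: Node, reply: str) -> None:
    """(a) Follow the designed flow and record a user-side response."""
    if user_turn.speaker != "user":
        raise ValueError("responses can only be recorded on user turns")
    user_turn.responses.append(reply)


def add_bot_utterance(parent: Node, text: str) -> Node:
    """(b) Suggest a new bot-side utterance that follows `parent`."""
    node = Node("bot", text, source="crowd")
    parent.children.append(node)
    return node


def add_user_branch(bot_turn: Node, text: str) -> Node:
    """(c) Open a new user-side branch under an existing bot turn."""
    if bot_turn.speaker != "bot":
        raise ValueError("user branches must hang off a bot turn")
    node = Node("user", text, source="crowd")
    bot_turn.children.append(node)
    return node


# A two-turn design, followed by one crowd interaction of each kind.
greeting = Node("bot", "Hi! What would you like to order?")
order = Node("user", "<order>")
greeting.children.append(order)

add_user_response(order, "A large latte, please.")
add_bot_utterance(order, "Would you like that hot or iced?")
add_user_branch(greeting, "Can I see the menu first?")
```

Tagging each turn with a `source` keeps crowd suggestions separate from the designer's original flow, which is one plausible way to support later review of what the crowd added.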

Result

3 patterns of analyzing crowd responses

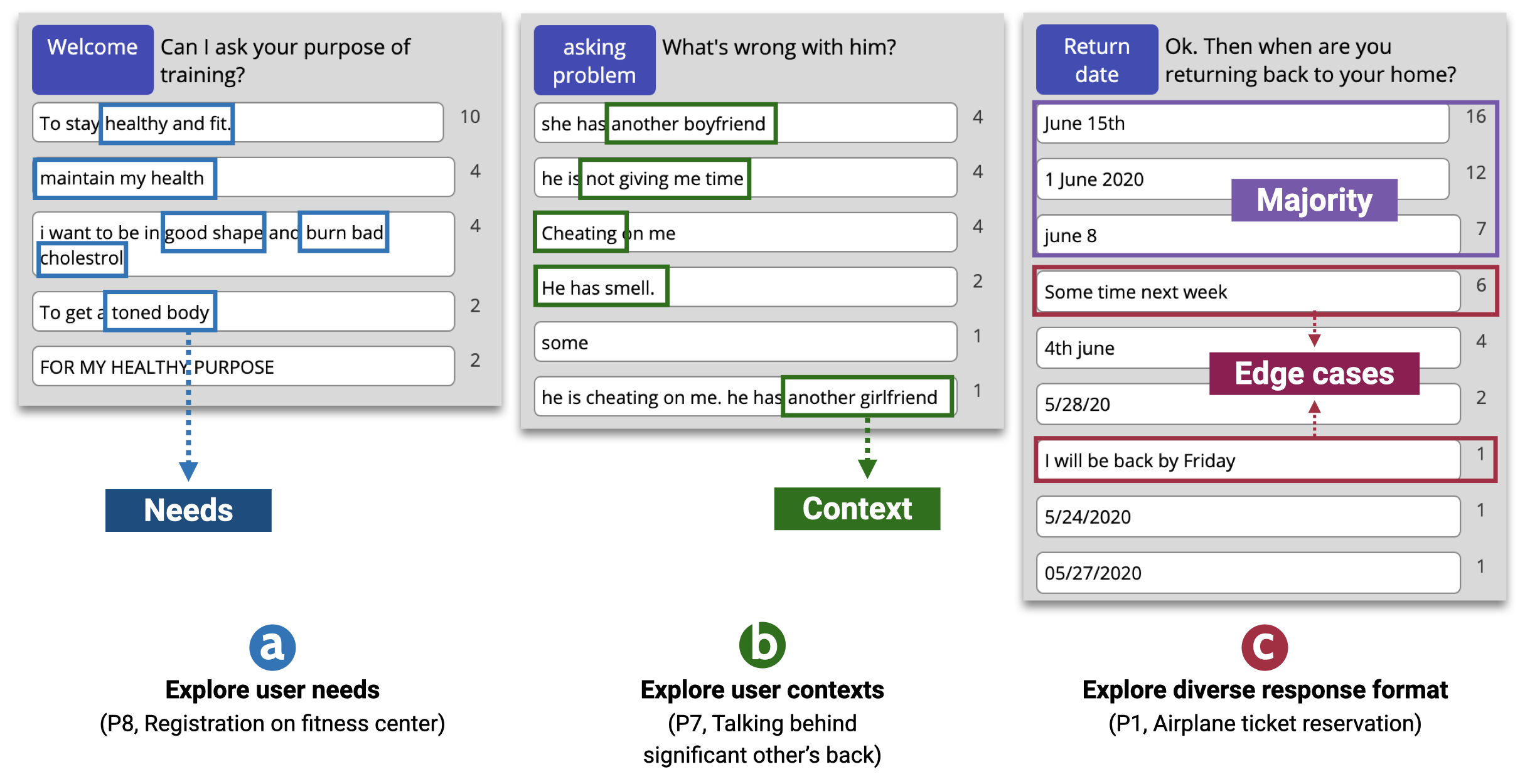

By analyzing the crowd responses, designers could explore user needs, contexts, and diverse response formats, all of which are essential to successful conversation design.

5 design improvement patterns with collected evidence from the crowd

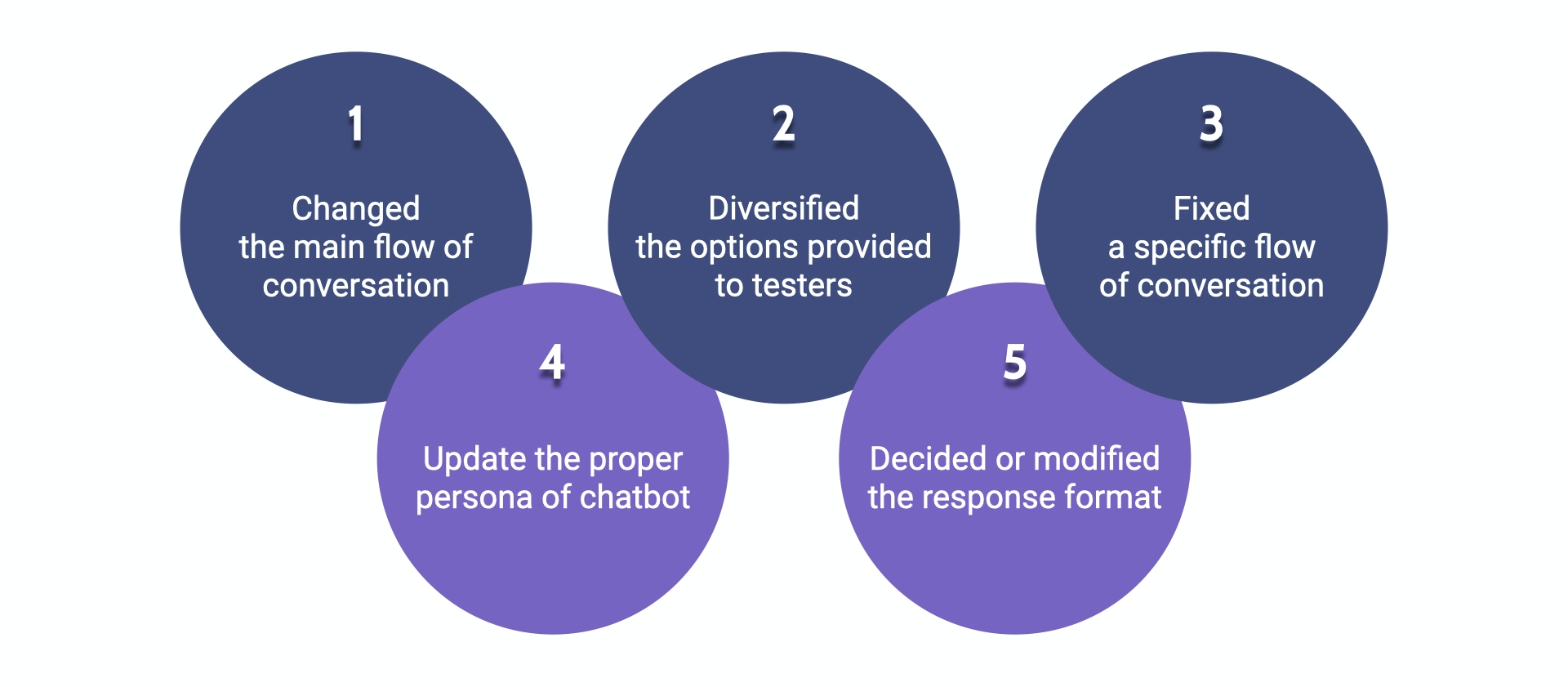

Designers revised their design by consulting diverse feedback and suggestions from the crowd. Revisions occurred at both a high level, such as changing the order of the conversation, and a low level, such as adding options or changing the tone of an utterance. Designers improved their conversation design with ProtoChat in five major patterns.

For more information, please check out our paper!

Publications

CSCW 2020

ProtoChat: Supporting the Conversation Design Process with Crowd Feedback

Yoonseo Choi, Toni-Jan Keith Monserrat, Jeongeon Park, Hyungyu Shin, Nyoungwoo Lee, Juho Kim

CSCW 2020 Demo

ProtoChat: Supporting the Conversation Design Process with Crowd Feedback

Yoonseo Choi, Toni-Jan Keith Monserrat, Jeongeon Park, Hyungyu Shin, Nyoungwoo Lee, Juho Kim

CUI2020

CHI 2020 Workshop on CUI@CHI

Leveraging the Crowd to Support the Conversation Design Process

Yoonseo Choi, Hyungyu Shin, Toni-Jan Keith Monserrat, Nyoungwoo Lee, Jeongeon Park, Juho Kim

CHI 2020 LBW

Supporting an Iterative Conversation Design Process

Yoonseo Choi, Hyungyu Shin, Toni-Jan Keith Monserrat, Nyoungwoo Lee, Jeongeon Park, Juho Kim

This work was supported by Samsung Research, Samsung Electronics Co., Ltd. (IO180410-05205-01).
